Intro

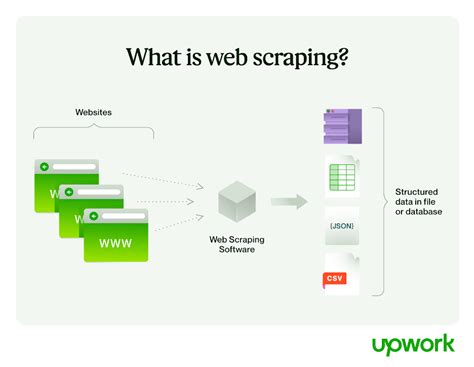

Automate data collection with Excel's web scraping capabilities. Learn how to pull data from a website automatically using Excel's built-in tools, including Web Queries and Power Query. Discover the benefits of web scraping, overcome common challenges, and master Excel formulas for seamless data extraction and integration.

Excel is a powerful spreadsheet application that can help you analyze and visualize data from various sources. One of its most useful features is the ability to pull data from a website automatically, saving you time and effort. In this article, we will explore the different ways to import data from a website into Excel, including using formulas, add-ins, and VBA macros.

Why Pull Data from a Website into Excel?

There are many reasons why you might want to import data from a website into Excel. Some common use cases include:

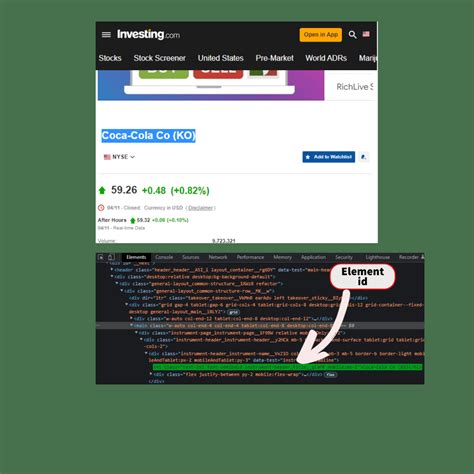

- Web scraping: Extracting data from a website that does not provide an API or other data export options.

- Monitoring stock prices: Retrieving stock prices or other financial data from websites like Yahoo Finance or Google Finance.

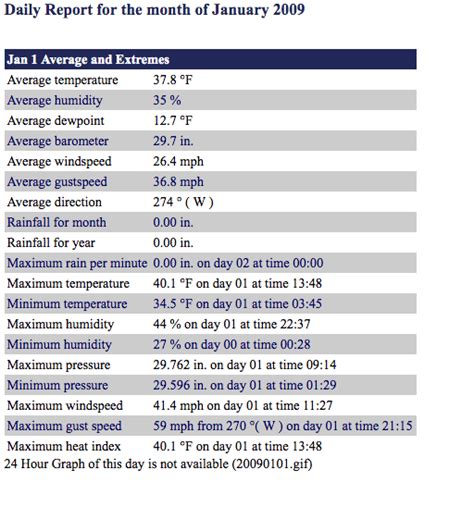

- Tracking weather data: Pulling weather forecasts or historical data from websites like Weather.com or OpenWeatherMap.

- Analyzing social media data: Importing data from social media platforms like Twitter or Facebook.

Using Formulas to Pull Data from a Website

Excel provides several formulas that can be used to import data from a website. Here are a few examples:

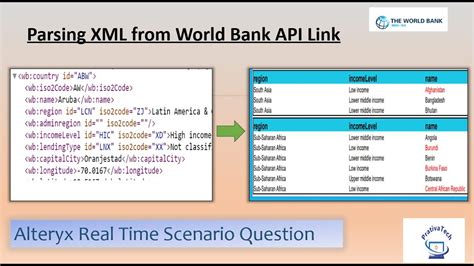

- WEBSERVICE: This function retrieves data from a web service or API and returns the response as text. You can use it to pull data from a website that provides an API endpoint. It is available in Excel 2013 and later on Windows only.

- FILTERXML: This formula allows you to parse XML data from a website and extract specific elements.

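- IMPORTHTML: This function imports HTML tables from a website, but it is a Google Sheets function and is not available in Excel. To import HTML tables into Excel, use Power Query's "From Web" option instead.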

Here's an example of how to use the WEBSERVICE formula to retrieve stock prices from Yahoo Finance:

=WEBSERVICE("https://query1.finance.yahoo.com/v7/finance/quote?symbols=AAPL")

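This formula requests a quote for Apple (AAPL) and returns the raw response as a JSON string, with the current price as one of its fields. Excel has no worksheet function for parsing JSON (FILTERXML handles XML only), so extracting the price takes Power Query or VBA. Yahoo Finance does not officially document this endpoint and may reject the request, in which case WEBSERVICE returns a #VALUE! error.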
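Because WEBSERVICE hands back the whole JSON response as text, a small user-defined function can pick out a single number from it. The following is a minimal sketch, not a full JSON parser: the function name ExtractJsonNumber is hypothetical, the key "regularMarketPrice" in the usage note is an assumption about the response, and the late-bound VBScript.RegExp object assumes Excel on Windows. The key is inserted into the pattern as-is, so it should not contain regular expression special characters.

```vba
Option Explicit

' Returns the first numeric value stored under "key" in a JSON string,
' or #N/A if the key is not found.
' This is a simple pattern match, not a full JSON parser.
Public Function ExtractJsonNumber(ByVal json As String, ByVal key As String) As Variant
    Dim re As Object
    Dim matches As Object

    ' Late-bound regular expression object (Windows only)
    Set re = CreateObject("VBScript.RegExp")
    ' Match "key" : -123.45 (optional whitespace, sign, decimals, exponent)
    re.Pattern = """" & key & """\s*:\s*(-?\d+(\.\d+)?([eE][+-]?\d+)?)"
    re.Global = False

    Set matches = re.Execute(json)
    If matches.Count = 0 Then
        ExtractJsonNumber = CVErr(xlErrNA)
    Else
        ' Val always treats "." as the decimal separator, regardless of locale
        ExtractJsonNumber = Val(matches(0).SubMatches(0))
    End If
End Function
```

With the WEBSERVICE result in cell A1, a formula such as =ExtractJsonNumber(A1, "regularMarketPrice") would return the number stored under that key, assuming the response contains it.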

Using Add-ins to Pull Data from a Website

There are several add-ins available for Excel that can help you import data from a website. Some popular options include:

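- Power Query: Built into Excel 2016 and later as "Get & Transform" (and available as a free add-in for Excel 2010 and 2013), Power Query provides a powerful set of tools for importing and transforming data from various sources, including websites.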

- DataConnex: This add-in allows you to connect to various data sources, including websites, and import data into Excel.

- Zapier: A third-party automation service rather than an Excel add-in in the strict sense. It lets you automate data imports from various sources, including websites, into Excel workbooks.

Here's an example of how to use Power Query to import data from a website:

- Go to the Data tab in Excel and click "Get Data" (labeled "New Query" in Excel 2016).

- Select "From Other Sources" and then "From Web".

- Enter the URL of the website you want to import data from and click "OK".

- In the Navigator pane, select the table you want to import and click "Load".
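The same steps can also be scripted from VBA, which is handy when you need to set up the query in several workbooks. The sketch below follows the pattern the macro recorder produces in Excel 2016 and later; the query name WebTable and the URL are placeholders, and it assumes the first table Power Query detects on the page is the one you want. Queries.Add raises an error if a query with the same name already exists.

```vba
Option Explicit

Sub AddWebQuery()
    Const QUERY_NAME As String = "WebTable"                       ' hypothetical query name
    Const SOURCE_URL As String = "https://www.example.com/data"   ' placeholder URL
    Dim mFormula As String
    Dim ws As Worksheet
    Dim lo As ListObject

    ' Power Query (M) formula: download the page and take the first detected table
    mFormula = "let" & vbCrLf & _
               "    Source = Web.Page(Web.Contents(""" & SOURCE_URL & """))," & vbCrLf & _
               "    FirstTable = Source{0}[Data]" & vbCrLf & _
               "in" & vbCrLf & _
               "    FirstTable"

    ' Register the query in the workbook (Excel 2016 or later)
    ActiveWorkbook.Queries.Add Name:=QUERY_NAME, Formula:=mFormula

    ' Load the query result into a table on a new worksheet
    Set ws = ActiveWorkbook.Worksheets.Add
    Set lo = ws.ListObjects.Add( _
        SourceType:=xlSrcExternal, _
        Source:="OLEDB;Provider=Microsoft.Mashup.OleDb.1;Data Source=$Workbook$;" & _
                "Location=" & QUERY_NAME & ";Extended Properties=""""", _
        Destination:=ws.Range("A1"))

    With lo.QueryTable
        .CommandType = xlCmdSql
        .CommandText = Array("SELECT * FROM [" & QUERY_NAME & "]")
        .Refresh BackgroundQuery:=False   ' wait until the data has loaded
    End With
End Sub
```

Once the query exists, "Refresh All" on the Data tab pulls the latest data without rerunning the macro.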

Using VBA Macros to Pull Data from a Website

VBA (Visual Basic for Applications) macros can be used to automate the process of importing data from a website. Here's an example of a VBA macro that retrieves data from a website using the MSXML2.XMLHTTP object:

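Sub ImportDataFromWebsite()

' Late-bound HTTP client, so no library reference is needed
Dim http As Object

Dim data As String

Set http = CreateObject("MSXML2.XMLHTTP")

' Synchronous GET request (the third argument, False, waits for the response)
http.Open "GET", "https://www.example.com/data", False

http.Send

' Stop if the server did not answer with HTTP 200 (OK)
If http.Status <> 200 Then

MsgBox "Request failed with status " & http.Status

Exit Sub

End If

data = http.responseText

' Parse the data using HTML or XML parsing libraries

' Import the data into Excel

End Sub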

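This macro sends a synchronous GET request to the specified URL and stores the response body as text. You can then parse it from VBA with the MSHTML document object (for HTML) or MSXML2.DOMDocument (for XML). HTMLAgilityPack, which is often suggested for this task, is a .NET library and cannot be called directly from VBA.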
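As an illustration of the parsing step, the sketch below copies the first HTML table in a response to a worksheet. It is one possible approach, not the only one: the procedure name WriteFirstTable is hypothetical, and it relies on the late-bound "htmlfile" (MSHTML) object, which assumes Excel on Windows.

```vba
Option Explicit

' Copies the first <table> found in htmlText to the sheet, starting at topLeft.
Sub WriteFirstTable(ByVal htmlText As String, ByVal topLeft As Range)
    Dim doc As Object
    Dim tables As Object
    Dim tbl As Object
    Dim r As Long, c As Long

    ' "htmlfile" creates a late-bound MSHTML document (Windows only)
    Set doc = CreateObject("htmlfile")
    doc.body.innerHTML = htmlText

    Set tables = doc.getElementsByTagName("table")
    If tables.Length = 0 Then
        MsgBox "No <table> element found in the response."
        Exit Sub
    End If

    ' Walk the rows and cells of the first table and write the text of each cell
    Set tbl = tables(0)
    For r = 0 To tbl.Rows.Length - 1
        For c = 0 To tbl.Rows(r).Cells.Length - 1
            topLeft.Offset(r, c).Value = tbl.Rows(r).Cells(c).innerText
        Next c
    Next r
End Sub
```

Inside the macro above, you could call it as WriteFirstTable data, ActiveSheet.Range("A1") once the response text has been stored in data.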

Best Practices for Pulling Data from a Website

When pulling data from a website, it's essential to follow best practices to avoid issues like data corruption, errors, or even website blocking. Here are some tips:

- Respect website terms of service: Make sure you have permission to scrape data from the website.

- Use a user agent string: Identify your bot or script using a user agent string to avoid being blocked.

- Handle errors and exceptions: Use error handling techniques to handle unexpected issues like website downtime or data format changes.

- Cache data: Cache data to reduce the load on the website and improve performance.
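The tips above can be combined in a small pair of helper functions. This is a sketch under stated assumptions: the function names, the user agent text, and the 15-minute cache lifetime are all hypothetical, and it uses MSXML2.ServerXMLHTTP.6.0 rather than the MSXML2.XMLHTTP object shown earlier because that object supports explicit timeouts. The cache lives in memory only and is lost when the workbook closes or the VBA project is reset.

```vba
Option Explicit

' In-memory cache: url -> Array(time fetched, response body)
Private pageCache As Object

' Fetches a URL, identifying the script and applying timeouts.
' Returns the response body, or an empty string if the request failed.
Function FetchText(ByVal url As String) As String
    Dim http As Object

    On Error GoTo Failed
    Set http = CreateObject("MSXML2.ServerXMLHTTP.6.0")
    ' Timeouts in milliseconds: resolve, connect, send, receive
    http.setTimeouts 5000, 5000, 10000, 10000
    http.Open "GET", url, False
    ' Hypothetical identifier; replace with your own name and contact details
    http.setRequestHeader "User-Agent", "ExcelDataImport/1.0 (contact: you@example.com)"
    http.send

    If http.Status = 200 Then
        FetchText = http.responseText
    Else
        Debug.Print "HTTP " & http.Status & " for " & url
    End If
    Exit Function

Failed:
    ' Network errors and timeouts end up here
    Debug.Print "Request error: " & Err.Description
End Function

' Returns a cached copy if it is younger than maxAgeMinutes,
' otherwise fetches the page again and stores the result.
Function FetchTextCached(ByVal url As String, _
                         Optional ByVal maxAgeMinutes As Long = 15) As String
    Dim body As String

    If pageCache Is Nothing Then Set pageCache = CreateObject("Scripting.Dictionary")

    If pageCache.Exists(url) Then
        If DateDiff("n", pageCache(url)(0), Now) < maxAgeMinutes Then
            FetchTextCached = pageCache(url)(1)
            Exit Function
        End If
    End If

    body = FetchText(url)
    ' Only cache successful responses
    If Len(body) > 0 Then pageCache(url) = Array(Now, body)
    FetchTextCached = body
End Function
```

Calling FetchTextCached("https://www.example.com/data") repeatedly within the cache lifetime sends only one request to the site.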

Conclusion

In this article, we explored the different ways to pull data from a website into Excel, including using formulas, add-ins, and VBA macros. We also discussed best practices for web scraping, such as respecting website terms of service, using a user agent string, handling errors and exceptions, and caching data. By following these tips and techniques, you can automate the process of importing data from a website into Excel and unlock new insights and analysis opportunities.

FAQ

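Q: Can I use Excel to pull data from a website that requires authentication? A: Yes, in many cases. Power Query supports Basic, Windows, Web API key, and organizational account authentication for web sources, and a VBA macro can send credentials or tokens with its requests. Sites that rely on an interactive login form are harder to automate.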

Q: How often can I pull data from a website without getting blocked? A: The frequency at which you can pull data from a website without getting blocked depends on the website's terms of service and your usage pattern. It's essential to respect website terms of service and use a user agent string to avoid being blocked.

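Q: Can I use Excel to pull data from a website that uses JavaScript? A: Only partly. Power Query's web import and VBA HTTP requests generally download the page's raw HTML and do not run JavaScript, so content that the browser renders after the page loads will be missing. Such pages often load their data from a JSON or XML endpoint that you can request directly; otherwise you need a browser automation tool.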

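Q: What are some common errors when pulling data from a website? A: Common problems include HTTP errors (such as 403 Forbidden, 404 Not Found, or 429 Too Many Requests), timeouts when the site is slow or down, queries that break because the page layout changed, and being blocked for sending too many requests or accessing the site without permission.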
