Intro

Troubleshoot Scrape URL errors with five practical fixes: verifying URL format, configuring user agent and proxy settings, handling JavaScript rendering, monitoring website restrictions, and optimizing scraper settings and performance.

Website scraping is a common way to gather data, monitor competitor activity, and perform market research. The process can be interrupted by errors, though, and the Scrape URL error is one of the most frequent. It occurs when the scraper cannot access or parse the URL of the webpage you are trying to scrape. In this article, we'll explore five ways to fix Scrape URL errors.

Understanding Scrape URL Errors

Scrape URL errors are typically triggered by issues related to the URL structure, website configuration, or scraper settings. Common causes include:

- Incorrect URL formatting

- Website restrictions on scraping

- Proxy server errors

- User agent issues

- JavaScript rendering problems

Causes and Symptoms

Before we dive into the solutions, it's essential to understand the causes and symptoms of Scrape URL errors. Some common symptoms include:

- Error messages indicating that the URL is invalid or cannot be accessed

- Failure to extract data from the webpage

- Webpage not loading or rendering correctly

- Scraper crashing or freezing

Fix 1: Verify URL Format and Structure

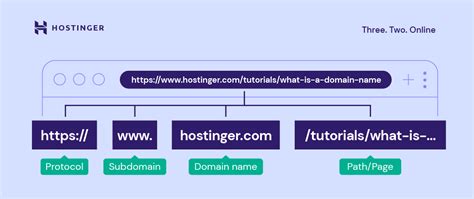

The first step in resolving Scrape URL errors is to verify the URL format and structure. Ensure that the URL is correctly formatted, including the protocol (http/https), domain, path, and query parameters.

- Check for any typos or errors in the URL

- Validate the URL using online tools or regex patterns

- Use a URL encoder to encode special characters
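The checks above can be sketched with Python's standard `urllib.parse` module. This is a minimal illustration, not a complete validator: the function names `is_valid_url` and `encode_url` and the `example.com` addresses are assumptions made for the example.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def is_valid_url(url: str) -> bool:
    """Return True if the URL has an http/https scheme and a host name."""
    try:
        parts = urlsplit(url.strip())
        return parts.scheme in ("http", "https") and bool(parts.hostname)
    except ValueError:
        # urlsplit raises ValueError for some malformed hosts.
        return False


def encode_url(url: str) -> str:
    """Percent-encode unsafe characters in the path and query string."""
    parts = urlsplit(url.strip())
    # "%" stays in the safe set so existing escapes are not encoded twice.
    path = quote(parts.path, safe="/%")
    query = quote(parts.query, safe="=&%")
    return urlunsplit((parts.scheme, parts.netloc, path, query, parts.fragment))


print(is_valid_url("example.com/page"))
print(encode_url("https://example.com/my page?q=a b"))
```

Keeping `%` in the safe set is a deliberate choice here: it lets the function run on URLs that are already partly encoded, at the cost of not escaping a literal percent sign.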

Best Practices for URL Formatting

To avoid Scrape URL errors, follow best practices for URL formatting:

- Use absolute URLs instead of relative URLs

- Specify the protocol (http/https) explicitly

- Percent-encode special characters rather than leaving them raw, and avoid encoding the same URL twice
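As a rough sketch of the first two practices, relative links can be resolved against the page they were found on with `urllib.parse.urljoin`. The helper names `to_absolute` and `with_scheme` are hypothetical, and defaulting to `https` when no protocol is given is an assumption.

```python
from urllib.parse import urljoin, urlsplit


def to_absolute(base_url: str, link: str) -> str:
    """Resolve a link found on base_url into an absolute URL."""
    return urljoin(base_url, link)


def with_scheme(url: str, default_scheme: str = "https") -> str:
    """Add an explicit protocol when the URL was written without one."""
    if urlsplit(url).scheme in ("http", "https"):
        return url
    return f"{default_scheme}://{url.lstrip('/')}"


base = "https://example.com/blog/post.html"
print(to_absolute(base, "../img/a.png"))
print(with_scheme("example.com/page"))
```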

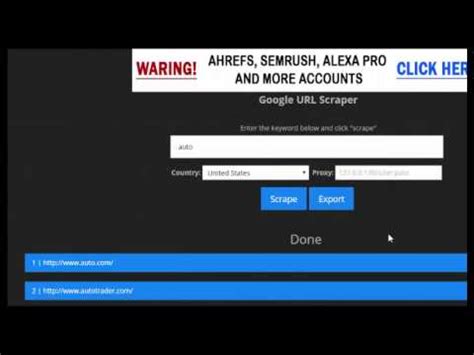

Fix 2: Configure User Agent and Proxy Settings

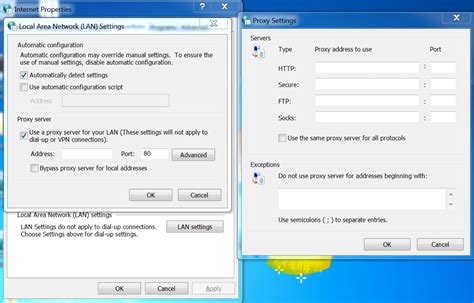

Another common cause of Scrape URL errors is incorrect user agent and proxy settings. Configure your scraper to use a valid user agent and proxy settings to avoid being blocked by websites.

- Use a rotating proxy server to avoid IP blocking

- Set a realistic browser user agent string, since many websites block the default user agent sent by scraping libraries

- Configure proxy authentication and rotation settings
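One way these settings might look with Python's standard `urllib.request` module is sketched below. The proxy address, credentials, and user agent string are placeholders, and `make_opener` and `make_request` are hypothetical helper names. No request is sent until the opener is actually used.

```python
import urllib.request

# Placeholder values: replace with your own proxy and a current browser string.
PROXY_URL = "http://user:password@proxy.example.com:8080"
USER_AGENT = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)


def make_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that routes http and https traffic through the proxy."""
    proxy = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(proxy)


def make_request(url: str, user_agent: str) -> urllib.request.Request:
    """Build a request that carries an explicit User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


opener = make_opener(PROXY_URL)
request = make_request("https://example.com/", USER_AGENT)
# To send it: opener.open(request, timeout=10)
```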

Best Practices for User Agent and Proxy Settings

To avoid Scrape URL errors, follow best practices for user agent and proxy settings:

- Use a diverse set of user agents to mimic human browsing behavior

- Rotate proxy servers regularly to avoid IP blocking

- Use proxy authentication so that only your scraper can use the proxy, and keep the credentials out of source control
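Rotation can be as simple as cycling through a list. The sketch below assumes round-robin proxies and a random user agent per request; the `Rotator` class, the proxy addresses, and the shortened user agent strings are all made up for illustration.

```python
import itertools
import random

# Hypothetical pools; real user agent strings would be full browser strings.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]


class Rotator:
    """Hand out a (proxy, user agent) pair for each request."""

    def __init__(self, proxies, user_agents, seed=None):
        self._proxies = itertools.cycle(proxies)  # round-robin, never exhausted
        self._user_agents = list(user_agents)
        self._rng = random.Random(seed)

    def next_identity(self):
        return next(self._proxies), self._rng.choice(self._user_agents)


rotator = Rotator(PROXIES, USER_AGENTS, seed=0)
for _ in range(3):
    print(rotator.next_identity())
```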

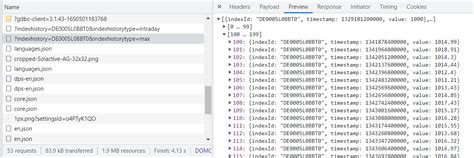

Fix 3: Handle JavaScript Rendering Issues

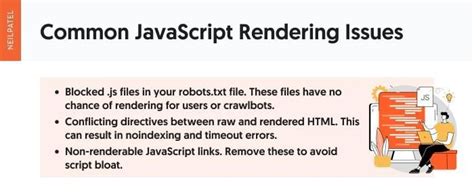

Some websites use JavaScript to load content dynamically, which can cause Scrape URL errors. Handle JavaScript rendering issues by using a headless browser or a JavaScript rendering engine.

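- Drive a headless browser with a tool like Puppeteer or Selenium

- For lighter pages, consider jsdom, which can execute page scripts in a simulated DOM; note that Cheerio only parses static HTML and does not run JavaScript

- Set a timeout for JavaScript rendering so that a page that never finishes loading cannot stall the scraper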
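Before reaching for a headless browser, it can help to check whether the data is already present in the static HTML. The sketch below is a heuristic built on the standard `html.parser` module, not a substitute for real rendering; `needs_js_rendering` and the sample markup are assumptions for the example.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that is not inside <script> or <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def needs_js_rendering(html: str, expected_text: str) -> bool:
    """Return True when expected_text is missing from the static markup."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return expected_text not in " ".join(parser.chunks)


static_page = "<html><body><h1>Price: 10 USD</h1></body></html>"
dynamic_page = (
    '<html><body><div id="app"></div>'
    '<script>render("Price: 10 USD")</script></body></html>'
)
print(needs_js_rendering(static_page, "Price: 10 USD"))
print(needs_js_rendering(dynamic_page, "Price: 10 USD"))
```

If the check returns True for a page you know contains the data in a browser, the content is probably injected by JavaScript and a headless browser is worth the extra cost.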

Best Practices for Handling JavaScript Rendering Issues

To avoid Scrape URL errors, follow best practices for handling JavaScript rendering issues:

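- Use a headless browser for pages whose content is loaded dynamically

- Make sure the tool you choose actually executes JavaScript; a plain HTML parser will not see dynamically loaded content

- Set a rendering timeout so that a page that never finishes loading cannot stall the scraper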

Fix 4: Monitor Website Restrictions and Updates

Websites often update their structure and restrictions, which can cause Scrape URL errors. Monitor website restrictions and updates to stay ahead of changes.

- Monitor website updates and changes to structure

- Check for any restrictions on scraping or access, such as robots.txt rules or terms of service

- Adjust scraper settings to accommodate changes
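One restriction that can be checked programmatically is a site's robots.txt file. The sketch below parses a sample file with the standard `urllib.robotparser` module; the rules, the `my-scraper` agent name, and the `load_rules` helper are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# Sample rules for the example; a real site publishes its own at /robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a queryable rule set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS_TXT)
print(rules.can_fetch("my-scraper", "https://example.com/private/data"))
print(rules.can_fetch("my-scraper", "https://example.com/public/page"))
print(rules.crawl_delay("my-scraper"))
```

Against a live site, `set_url()` followed by `read()` on the parser would download the file instead of parsing a string.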

Best Practices for Monitoring Website Restrictions and Updates

To avoid Scrape URL errors, follow best practices for monitoring website restrictions and updates:

- Use website monitoring tools to track changes

- Check website terms of service for any restrictions

- Adjust scraper settings regularly to accommodate changes

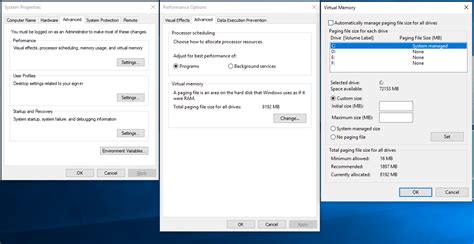

Fix 5: Optimize Scraper Settings and Performance

Finally, tune your scraper's settings for speed, accuracy, and reliability. A scraper that sends too many requests or repeats work it has already done is more likely to run into Scrape URL errors.

- Optimize scraper settings for speed and accuracy

- Use caching and queueing to improve performance

- Monitor scraper performance and adjust settings regularly
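Caching and queueing can be illustrated with a small in-memory sketch. It assumes the caller supplies a `fetch` function; the `crawl` name and the fake fetcher are hypothetical, and a production scraper would likely add delays, retries, and persistent storage.

```python
from collections import deque
from typing import Callable, Dict, Iterable


def crawl(urls: Iterable[str], fetch: Callable[[str], str]) -> Dict[str, str]:
    """Fetch each distinct URL once, in first-seen order."""
    queue = deque(urls)          # pending work, processed first in, first out
    cache: Dict[str, str] = {}   # url -> page body already fetched
    while queue:
        url = queue.popleft()
        if url in cache:
            continue             # already fetched, skip the duplicate request
        cache[url] = fetch(url)
    return cache


calls = []


def fake_fetch(url: str) -> str:
    """Stand-in for a real HTTP request so the example runs offline."""
    calls.append(url)
    return f"<html>{url}</html>"


pages = crawl(
    ["https://example.com/a", "https://example.com/b", "https://example.com/a"],
    fake_fetch,
)
print(len(pages), len(calls))
```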

Best Practices for Optimizing Scraper Settings and Performance

To avoid Scrape URL errors, follow best practices for optimizing scraper settings and performance:

- Use caching and queueing to improve performance

- Monitor scraper performance regularly

- Adjust settings to optimize speed and accuracy

Conclusion

By following these five fixes, you can resolve Scrape URL errors and improve the performance of your web scraping operations. Remember to verify URL format and structure, configure user agent and proxy settings, handle JavaScript rendering issues, monitor website restrictions and updates, and optimize scraper settings and performance.