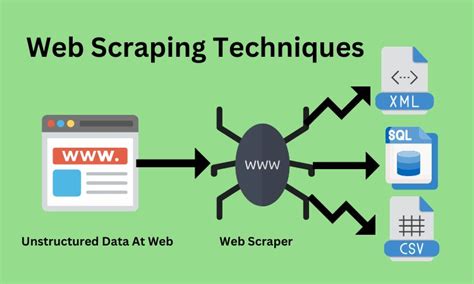

Data scraping, also known as web scraping, is the process of extracting data from websites, web pages, and online documents. With the vast amount of data available on the internet, businesses, researchers, and individuals can benefit from collecting and analyzing this data to gain insights, make informed decisions, and stay competitive. One of the most popular ways to store and analyze scraped data is in Microsoft Excel. In this article, we will explore five ways to scrape data from a website into Excel.

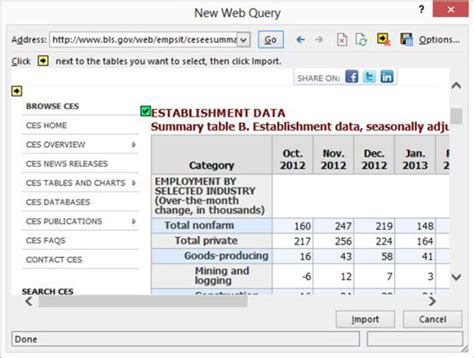

Method 1: Using Excel's Built-in Web Query Tool

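Excel's built-in web import lets you pull data from a website directly into your spreadsheet. In Excel 2016 and later, this is the "From Web" connector, part of Power Query (Get & Transform); older versions offered a similar legacy Web Query feature. This method is easy to use and doesn't require any programming knowledge. To import data from the web, follow these steps: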

- Open Excel and go to the "Data" tab.

- Click on "From Web" in the "Get & Transform Data" group.

- Enter the URL of the website you want to scrape and click "OK."

- In the Navigator window, select the table you want to import and click "Load."

Pros and Cons of Using Excel's Web Query Tool

Pros:

- Easy to use, no programming knowledge required.

- Fast data import.

- Can import HTML tables as well as files such as CSV, JSON, or XML published at a URL.

Cons:

- Limited control over the scraping process.

- May not work with complex websites or with data that a page loads dynamically using JavaScript.

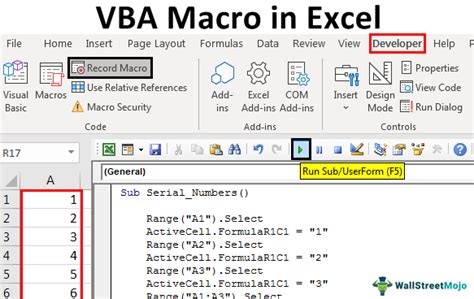

Method 2: Using VBA Macros

VBA (Visual Basic for Applications) macros are a powerful way to automate data scraping in Excel. With VBA, you can create custom scripts to extract data from websites, handle complex data formats, and even interact with web pages. To use VBA macros, follow these steps:

- Open the Visual Basic Editor by pressing "Alt + F11" or by navigating to "Developer" > "Visual Basic." (The Developer tab is hidden by default; you can enable it under "File" > "Options" > "Customize Ribbon.")

- Create a new module by clicking "Insert" > "Module."

- Write a VBA procedure that downloads the page's HTML (for example, with the MSXML2.XMLHTTP object) and writes the values you need into worksheet cells.

- Run the macro by clicking "Run" or by pressing "F5."

Pros and Cons of Using VBA Macros

Pros:

- High degree of control over the scraping process.

- Can handle more complex websites than the built-in import, although pages that build their content with JavaScript remain difficult.

- Can be automated to run at regular intervals.

Cons:

- Requires programming knowledge.

- Can be time-consuming to write and debug scripts.

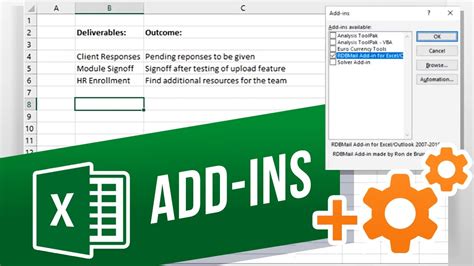

Method 3: Using Excel Add-ins

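Excel add-ins extend Excel's functionality and are available both from Microsoft and from third-party vendors. Several can help with data scraping. For example, Power Query was released as a free Microsoft add-in for Excel 2010 and 2013 (it is built into Excel 2016 and later), and various third-party scraping add-ins are also available. To use an Excel add-in, follow these steps: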

- Search for and download an Excel add-in that supports data scraping.

- Install the add-in and, if prompted, restart Excel.

- Follow the add-in's instructions to scrape data from a website.

Pros and Cons of Using Excel Add-ins

Pros:

- Easy to use, no programming knowledge required.

- Some add-ins can handle complex or dynamic websites.

- Often includes additional features, such as data analysis and visualization.

Cons:

- May require purchase or subscription.

- Can be resource-intensive and slow down Excel.

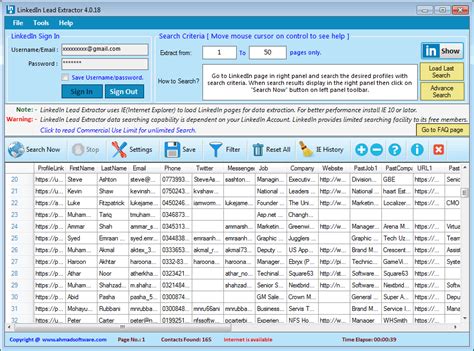

Method 4: Using Online Data Scraping Tools

There are several online data scraping tools available that can extract data from websites and export it to Excel. These tools often provide a user-friendly interface and don't require programming knowledge. To use an online data scraping tool, follow these steps:

- Search for and sign up for an online data scraping tool.

- Enter the URL of the website you want to scrape and select the data you want to extract.

- Choose the export format, such as Excel or CSV.

- Download the extracted data.

Pros and Cons of Using Online Data Scraping Tools

Pros:

- Easy to use, no programming knowledge required.

- Fast data extraction.

- Often includes additional features, such as data analysis and visualization.

Cons:

- May require purchase or subscription.

- Offer less control over what is extracted than a custom script.

- May not support complex or dynamic websites.

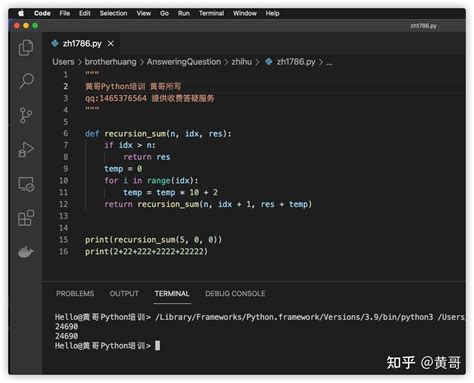

Method 5: Using Python Scripts

Python is a popular programming language that can be used to scrape data from websites. With Python, you can create custom scripts to extract data from websites, handle complex data formats, and even interact with web pages. To use Python scripts, follow these steps:

- Install Python and the libraries you need, such as Requests and BeautifulSoup for fetching and parsing pages, or the Scrapy framework.

- Write your Python script to scrape data from the website.

- Run the script using a Python interpreter.

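- Export the extracted data to Excel, for example by writing a CSV file or by using a library such as pandas or openpyxl to create an .xlsx workbook.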
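The steps above can be sketched with nothing but Python's standard library, which is handy for a first test before installing anything. This is a minimal sketch, not a production scraper: it assumes the data sits in an ordinary HTML table in the page source (not loaded later by JavaScript), it does not handle nested tables, and the function names (fetch_html, extract_tables, save_csv) and the example URL are hypothetical. It saves to CSV, which Excel opens directly.

```python
import csv
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class TableParser(HTMLParser):
    """Collect every <table> in a page as a list of rows of cell text."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so "&amp;" arrives as "&"
        self.tables = []    # one list of rows per <table>, in document order
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def _end_cell(self):
        if self._cell is not None:
            # Join the cell's text and collapse runs of whitespace.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None

    def _end_row(self):
        self._end_cell()
        if self._row:  # skip rows with no cells
            self.tables[-1].append(self._row)
        self._row = None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])  # nested tables are not handled specially
        elif tag == "tr" and self.tables:
            self._end_row()  # tolerate a missing </tr>
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._end_cell()  # tolerate a missing </td>
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._end_cell()
        elif tag in ("tr", "table"):
            self._end_row()

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def extract_tables(html):
    """Return all tables in an HTML string as lists of rows."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.tables


def fetch_html(url):
    """Download a page; some sites reject requests without a User-Agent header."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(req, timeout=30) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def save_csv(rows, path):
    """Write rows to CSV; "utf-8-sig" adds a byte-order mark so Excel detects UTF-8."""
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        csv.writer(f).writerows(rows)


# Example usage (hypothetical URL; requires network access):
#   tables = extract_tables(fetch_html("https://example.com/prices"))
#   save_csv(tables[0], "prices.csv")
```

If pandas is installed (together with a parser such as lxml and the openpyxl package), pandas.read_html and DataFrame.to_excel can do the parsing and .xlsx export in a couple of lines; the standard-library version simply makes each step visible.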

Pros and Cons of Using Python Scripts

Pros:

- High degree of control over the scraping process.

- Can handle complex or dynamic websites, for example by driving a real browser with a tool such as Selenium.

- Can be automated to run at regular intervals.
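Scheduled runs are usually best left to the operating system (cron on Linux and macOS, Task Scheduler on Windows), which can launch a script at fixed times. For a script that should keep itself running, a minimal sketch of the idea is a loop that calls your scraping function and sleeps between runs. The names run_periodically and my_scrape_job are hypothetical, and the timestamped file naming is one possible convention, not a requirement.

```python
import time
from datetime import datetime


def run_periodically(job, interval_seconds, runs, sleep=time.sleep):
    """Run job(output_path) `runs` times, pausing interval_seconds between runs.

    Each run gets a timestamped CSV name so earlier results are kept.
    `sleep` can be replaced (e.g. in tests) to avoid real waiting.
    """
    results = []
    for i in range(runs):
        stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
        results.append(job(f"scrape-{stamp}.csv"))
        if i < runs - 1:  # no need to wait after the last run
            sleep(interval_seconds)
    return results


# Example (hypothetical job): my_scrape_job(path) is your own function that
# scrapes the site and writes its results to `path`; run it hourly, three times.
#   run_periodically(my_scrape_job, 3600, 3)
```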

Cons:

- Requires programming knowledge.

- Can be time-consuming to write and debug scripts.

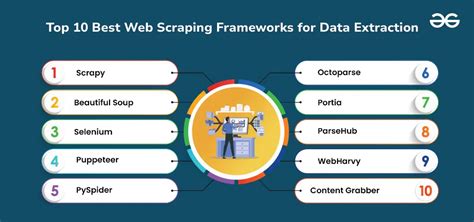


In conclusion, there are several ways to scrape data from a website into Excel, each with its own pros and cons. The right method depends on your needs, your programming experience, and the complexity of the website you want to scrape. Excel's built-in import, add-ins, and online tools suit simple, static tables without any coding, while VBA macros and Python scripts give you more control when pages are complex or dynamic.