Intro

This guide covers five ways to get web data into Excel: Excel's built-in web query tool, VBA macros, third-party add-ins, Python libraries, and online web scraping tools. For each method you'll find the basic steps along with its advantages and limitations.

Web scraping is a common way to pull information from websites and convert it into a usable format. Excel, one of the most widely used data analysis tools, is often the preferred destination for that data. In this article, we'll explore five ways to scrape web data into Excel so you can pick the one that fits your data analysis needs.

Web scraping, also known as web data extraction, is the process of automatically extracting data from websites, web pages, and online documents. This technique is useful for collecting data from websites that do not provide a public API or a straightforward way to download the data in a structured format.

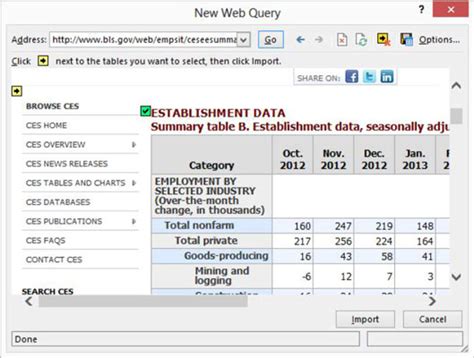

Method 1: Using Excel's Built-in Web Query Tool

Excel has a built-in web query tool that allows you to import data from websites directly into your spreadsheet. To use this feature, follow these steps:

- Go to the "Data" tab in your Excel ribbon

- Click on "From Web" in the "Get & Transform Data" group

- Enter the URL of the webpage you want to scrape

- Select the table or data you want to import

- Click "Load" to import the data into your Excel sheet

This method is straightforward and easy to use, but it has limitations. It works best when the data already sits in a structured element, such as an HTML table, in the page as the server delivers it. Pages that build their content with JavaScript after loading, or that spread data across complex layouts, often import incompletely or not at all.
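
To get a rough idea of whether a page falls on the right side of that limitation, you can check whether its raw HTML contains a table at all. The sketch below is only an illustration: the two sample pages and the `count_static_tables` helper are made up for this example, and a table in the raw HTML is a hint, not a guarantee, that the import will work.

```python
from html.parser import HTMLParser


class TableCounter(HTMLParser):
    """Counts <table> elements and their rows in raw (unrendered) HTML."""

    def __init__(self):
        super().__init__()
        self.tables = 0
        self.rows = 0

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables += 1
        elif tag == "tr":
            self.rows += 1


def count_static_tables(html: str) -> tuple[int, int]:
    """Return (table_count, row_count) found in the HTML as served."""
    counter = TableCounter()
    counter.feed(html)
    counter.close()
    return counter.tables, counter.rows


# Hypothetical page whose table is already present in the served HTML.
static_page = "<table><tr><td>Widget</td><td>9.99</td></tr></table>"
# Hypothetical page that only fills in its data with JavaScript after loading.
scripted_page = "<div id='app'></div><script>renderTable()</script>"

print(count_static_tables(static_page))    # one table, one row
print(count_static_tables(scripted_page))  # nothing to import
```

If the second case describes your target page, one of the later methods is likely a better fit.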

Advantages and Limitations

- Advantages: Easy to use, built-in feature, no coding required

- Limitations: Limited to structured data, may not work for complex web pages, unreliable for content rendered by JavaScript

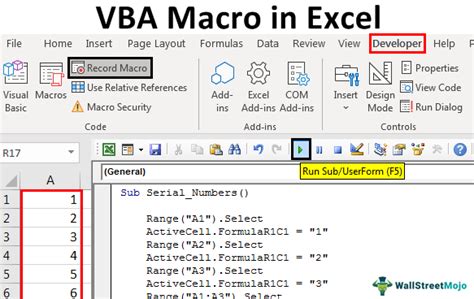

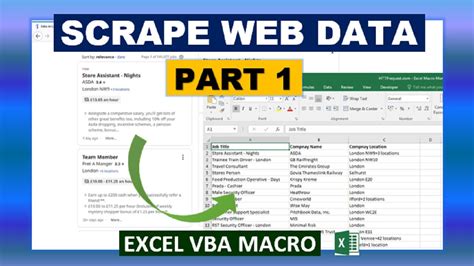

Method 2: Using VBA Macros

VBA (Visual Basic for Applications) macros are a powerful tool for automating tasks in Excel. You can use VBA macros to scrape web data by sending HTTP requests to websites and parsing the HTML responses. To use VBA macros for web scraping, follow these steps:

- Open the Visual Basic Editor in Excel by pressing "Alt + F11" or navigating to "Developer" > "Visual Basic"

- Create a new module by clicking "Insert" > "Module"

- Write your VBA code to send HTTP requests (for example with an MSXML2.XMLHTTP object) and parse the HTML responses (for example with an HTMLDocument object)

- Run the macro by clicking "Run" or pressing "F5"

This method requires some programming knowledge and can be time-consuming to set up, but it provides more flexibility and control over the web scraping process.

Advantages and Limitations

- Advantages: Flexible, customizable, can handle complex web pages

- Limitations: Requires programming knowledge, time-consuming to set up, may not work for websites that use JavaScript

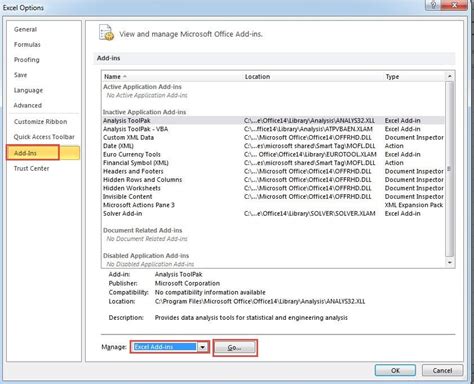

Method 3: Using Third-Party Add-ins

There are several third-party add-ins and companion tools for Excel that provide web scraping functionality. They can simplify the web scraping process and offer more features and flexibility than the built-in web query tool. Tools commonly mentioned in this context include:

- Import.io

- ParseHub

Scrapy sometimes appears in lists like this one, but it is a Python framework rather than an Excel add-in; it is covered under Method 4.

To use a third-party add-in, follow these steps:

- Install the add-in by downloading and installing it from the vendor's website

- Activate the add-in in Excel by navigating to "File" > "Options" > "Add-ins"

- Use the add-in's interface to configure the web scraping settings and import the data into your Excel sheet

This method provides more features and flexibility than the built-in web query tool, but may require a subscription or license fee.

Advantages and Limitations

- Advantages: Provides more features and flexibility, easy to use, supports complex web pages

- Limitations: May require a subscription or license fee, may have limitations on the amount of data that can be scraped

Method 4: Using Python Libraries

Python is a popular programming language for web scraping, and there are several libraries available that provide web scraping functionality. Some popular Python libraries for web scraping include:

- BeautifulSoup

- Scrapy

- Requests

To use a Python library for web scraping, follow these steps:

- Install the library by running "pip install" followed by the package name in your command prompt or terminal, for example "pip install requests beautifulsoup4"

- Write your Python code to send HTTP requests and parse the HTML responses

- Run the Python script, save the results to a CSV or .xlsx file, and open that file in Excel

This method requires some programming knowledge and can be time-consuming to set up, but it provides more flexibility and control over the web scraping process.
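
The steps above can be sketched roughly as follows. To keep the example runnable without installing anything, it swaps in Python's standard library (`urllib.request` and `html.parser`) for Requests and BeautifulSoup, and it writes CSV because Excel opens CSV directly. The sample HTML, the `fetch_html` helper, and the user-agent string are assumptions made for illustration; `fetch_html` is defined but not called, so the sketch works offline.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class TableRows(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def fetch_html(url: str) -> str:
    """Download a page as text. Not called below, so the sketch runs offline."""
    request = Request(url, headers={"User-Agent": "excel-scrape-sketch/0.1"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def table_to_rows(html: str) -> list[list[str]]:
    """Parse HTML and return its table cells as a list of rows."""
    parser = TableRows()
    parser.feed(html)
    parser.close()
    return parser.rows


def rows_to_csv(rows: list[list[str]]) -> str:
    """Serialize rows as CSV text, a format Excel opens directly."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()


# Hypothetical page content standing in for a real response body.
sample_html = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget, large</td><td>24.50</td></tr>
</table>
"""

rows = table_to_rows(sample_html)
csv_text = rows_to_csv(rows)
print(csv_text)
```

For a real page you would pass `fetch_html(url)` to `table_to_rows` and write `csv_text` to a file. Saving with the `utf-8-sig` encoding usually helps Excel display non-English characters correctly.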

Advantages and Limitations

- Advantages: Flexible, customizable, can handle complex web pages

- Limitations: Requires programming knowledge, time-consuming to set up, and plain HTTP requests do not run JavaScript, so pages that render their content in the browser need extra tooling

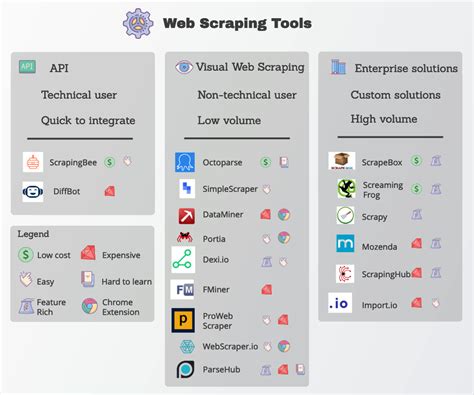

Method 5: Using Online Web Scraping Tools

There are several online web scraping tools available that provide a user-friendly interface for scraping web data. These tools can simplify the web scraping process and provide more features and flexibility than the built-in web query tool. Some popular online web scraping tools include:

- Import.io

- ParseHub

- Diffbot

To use an online web scraping tool, follow these steps:

- Sign up for an account on the tool's website

- Configure the web scraping settings using the tool's interface

- Run the web scraping job, export the results in a format Excel can open (such as CSV), and open the file in Excel

This method provides more features and flexibility than the built-in web query tool, but may require a subscription or license fee.
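
If a tool hands you JSON rather than a spreadsheet-ready file, a small script can bridge the gap. The sketch below assumes the export is a JSON array of flat objects; that shape, the field names, and the `json_records_to_csv` helper are all hypothetical, since each tool defines its own export format.

```python
import csv
import io
import json


def json_records_to_csv(json_text: str) -> str:
    """Flatten a JSON array of flat objects into CSV text for Excel."""
    records = json.loads(json_text)

    # Build the header from every key seen, in first-seen order,
    # so records with extra fields do not break the export.
    columns = []
    for record in records:
        for key in record:
            if key not in columns:
                columns.append(key)

    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=columns, restval="", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)  # missing fields become empty cells
    return buffer.getvalue()


# Hypothetical export; real tools define their own field names and nesting.
export = (
    '[{"title": "Widget", "price": 9.99},'
    ' {"title": "Gadget", "price": 24.5, "stock": 3}]'
)

print(json_records_to_csv(export))
```

Nested objects would need flattening first; this sketch deliberately handles only the flat case.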

Advantages and Limitations

- Advantages: Provides more features and flexibility, easy to use, supports complex web pages

- Limitations: May require a subscription or license fee, may have limitations on the amount of data that can be scraped

We hope this article has provided you with a comprehensive guide to scraping web data into Excel. Whether you're a beginner or an advanced user, there's a method that suits your needs. Remember to always check the website's terms of service and robots.txt file before scraping data, and to handle the data responsibly and ethically. Happy scraping!
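
As a starting point for the robots.txt check mentioned above, Python's standard library includes a parser for it. The rules, URLs, and user-agent name below are made up for illustration, and a passing check does not replace reading the site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would load the site's own file.
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
]

parser = RobotFileParser()
parser.parse(robots_lines)


def is_allowed(url: str, user_agent: str = "excel-scrape-sketch") -> bool:
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://example.com/products"))        # allowed by the rules above
print(is_allowed("https://example.com/private/report"))  # blocked by Disallow
```

Against a live site, you would call `parser.set_url(...)` with the address of its robots.txt file and then `parser.read()` instead of passing lines by hand.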