Intro

Streamline your monitoring with these 5 expert-crafted Grafana alert template examples. Discover how to create custom alerts for Prometheus, InfluxDB, and more. Learn to optimize your dashboard notifications, reduce alert fatigue, and enhance observability with our actionable template tutorials and best practices.

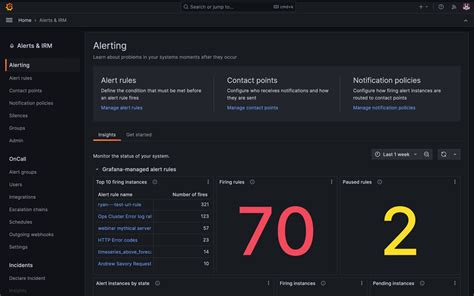

Grafana is a powerful visualization tool used for monitoring and observability. One of its key features is the ability to create custom alerts, which enable users to receive notifications when specific conditions are met. These alerts can be crucial for ensuring the health and performance of applications, infrastructure, and services. In this article, we will delve into the world of Grafana alerts by providing five detailed examples of alert templates that cover various scenarios. These examples will help you understand how to create effective alerts tailored to your specific needs.

Understanding Grafana Alerts

Before diving into the examples, it's essential to understand the basics of Grafana alerts. Grafana integrates with various data sources, such as Prometheus, Loki, and Graphite, to fetch data and display it on dashboards. Alerts are defined within these dashboards, allowing users to specify conditions under which notifications should be sent. These conditions can be based on threshold values, absence or presence of data, or even complex queries that aggregate data across multiple sources.

Configuring Alerts in Grafana

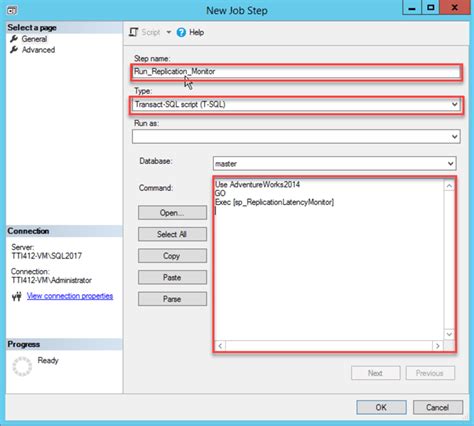

To create an alert in Grafana, navigate to your dashboard and follow these steps:

- Click on the panel for which you want to create an alert.

- Go to the "Alert" tab.

- Define your alert condition using the query builder or a custom query.

- Specify the notification channels through which you want to receive alerts, such as email, Slack, or PagerDuty.

Example 1: CPU Usage Alert

A common use case for Grafana alerts is monitoring CPU usage. Here’s how you might configure an alert for high CPU usage:

- Condition:

avg(cpu_usage{job="your_job", mode="idle"}) > 0.5 - This condition will trigger an alert if the average CPU usage (in idle mode) for a specific job exceeds 50%.

Tips for CPU Usage Alerts

- Ensure the job label (

your_job) matches the actual job name in your Prometheus configuration. - Adjust the threshold value (

0.5) according to your specific requirements.

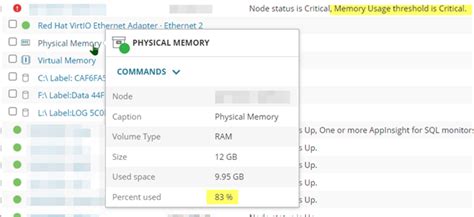

Example 2: Memory Usage Alert

Another crucial metric to monitor is memory usage. An alert for memory usage could be configured as follows:

- Condition:

avg(memory_usage{job="your_job", mode="used"}) > 0.7 - This condition triggers an alert if the average used memory for a specific job exceeds 70%.

Tips for Memory Usage Alerts

- Similar to CPU usage, adjust the job label and threshold value as necessary.

- Consider adding a delay to avoid temporary spikes triggering the alert.

Example 3: Disk Space Alert

Monitoring disk space is vital to prevent data loss and ensure smooth operations. A disk space alert might look like this:

- Condition:

avg(disk_usage{job="your_job", device="/dev/sda1"}) > 0.8 - This triggers an alert if the average disk usage for a specific device (

/dev/sda1) exceeds 80%.

Tips for Disk Space Alerts

- Ensure the device label matches the actual device in your system.

- Regularly review and adjust the threshold as disk usage patterns change.

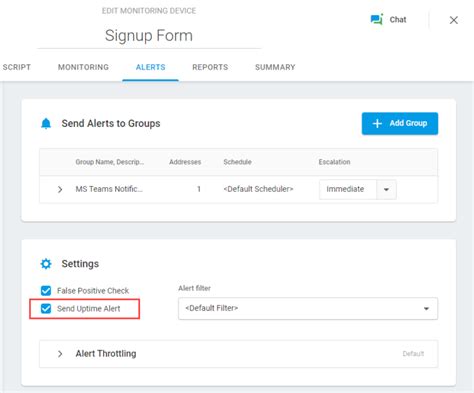

Example 4: Uptime Alert

Uptime is a critical metric for service availability. An alert for low uptime could be configured as follows:

- Condition:

avg(up{job="your_job"}) < 0.99 - This condition triggers an alert if the average uptime for a specific job falls below 99%.

Tips for Uptime Alerts

- Adjust the threshold value according to your service level agreements (SLAs).

- Consider creating a dashboard for visualizing uptime trends.

Example 5: Request Latency Alert

For applications, monitoring request latency is essential for user experience. An alert for high request latency might look like this:

- Condition:

avg(request_latency{job="your_job", quantile="0.99"}) > 500 - This triggers an alert if the 99th percentile of request latency exceeds 500 milliseconds.

Tips for Request Latency Alerts

- Adjust the quantile and threshold value based on your application’s performance requirements.

- Consider segmenting latency by endpoint or user agent for more detailed insights.

Grafana Alert Templates Gallery

Final Thoughts on Grafana Alert Templates

Creating effective alert templates in Grafana requires a deep understanding of your system's performance metrics, potential bottlenecks, and critical thresholds. By following the examples provided in this article, you can establish a robust monitoring system that notifies you of issues before they escalate into major problems. Remember, the key to successful alerting is to strike a balance between sensitivity and specificity, ensuring you receive timely notifications without being overwhelmed by false positives.

Now that you have explored these Grafana alert template examples, consider applying these concepts to your own monitoring setup. Share your experiences, tips, or questions in the comments below, and don’t hesitate to reach out if you need further assistance in crafting the perfect alerts for your unique environment.